As I got ready for this week at VMworld – something hit me.

Virtual Geek readers know what that means. A long, meandering stream of consciousness blog post. Interesting for some, mind-numbingly strange for others.

For those that don’t like the sound of that, STOP. For those that do, grab a bag of popcorn, a glass of wine/beer/water (your choice), make a mental commitment to give me 30 minutes, and read on!

My subconscious is always working furiously looking for patterns, and then building analogies and stories that explain the pattern and make it real.

I’m lucky to be in a role where I see a LOT. A LOT of customers. A LOT of partners. A LOT of engineering teams and roadmaps. It’s all fodder for my pattern recognition engine :-)

I’ve also realized there is a bit of a theme this VMworld – at least for the stuff I’m working on, and the customers I see – a “meta” that ties things together.

It’s the forces of “DIY” vs. “Consumption” approaches to IT.

I’ve talked about it as a “build to buy” continuum – but I hate to say it – there’s a flaw in the picture, an error in my earlier taxonomy.

The error: it isn’t a single continuum. There’s a “builder” and a “consumer” approach at every layer in the stack.

Let me start with a story.

In early August, I got a request from the UK Dell EMC and VMware teams to jump on a call with a customer. They were on the verge of making a big “DIY” vs. “Consume” decision. It would be a radical simplification of their 3 data centers, and represented 126 VxRail appliances in total. Every customer is precious, and for this customer this was a massive decision. However, the solution came at about a 30% premium vs. picking Dell EMC PowerEdge servers from the VMware vSAN Ready Node HCL – and the customer was struggling with the decision.

This is pretty common. It’s the DIY vs. Consume trade-off. It’s also something humans that tend to be “builders” or humans focused on their piece of the stack (examples I tend to see this with a lot: VMware vSAN specialists, Dell EMC PowerEdge specialists) scratch their heads over.

We jumped on the call. I asked the customer how their testing with vSAN and PowerEdge had been going. Answer: NOT well. For 6 months, they had been working to test, validate, work through various small issues, tweak and tune. I asked them how their VxRail testing had been going. Answer: well so far. I asked them what their priorities were. Answer: speed and outcome, and since this was for their 2 core datacenters in a stretched configuration plus a 3rd DR site, they needed a single throat to choke, one accountable party. I asked them about the people doing the DIY testing – were they stupid, were they retreads? Or were they some of the smartest people at the customer and in the local VMware and Dell EMC teams? Answer: some of their best. BTW – this is almost always the case.

The obvious question – putting aside all the value-added elements like built-in DR (every VxRail includes $35K worth of Data Protection), or cloud storage, or integrated lifecycle management – was what value they assign to 6 months of work by their smartest people that still resulted in no outcome. Silence, as they thought about it for the first time.

This is the crux of the DIY vs. Consume choice.

Some people simply refuse to put a value on that integration and testing work.

Most vSphere customers have become accustomed to taking the 6 month period between a major release (6.5) and the subsequent u1 release (6.5u1) and using that time to have some of their smartest people select hardware, build test clusters, integrate with their Data Protection, DR, and other automation tooling. The people doing the work hate it, but secretly kind of love it – because they are DIY people at heart.

LOOK, PERSONALLY, I GET IT.

Like most people in IT – I played with a lot of Lego growing up. I love learning, tinkering. My idea of fun on Christmas morning is getting a box of hardware and building my own home systems and labs. I end up on the receiving end of emails like this:

![clip_image001 clip_image001]()

For people like me – there’s an inherent resistance to the idea of no longer getting to tinker. But – there’s a freedom in passing that burden on to others so we can pursue places of new learning, new value (hint, hint, it’s up the stack, silly!).

It’s funny – the people who love to tinker say “but we’re doing it now, and we have the skill”. Yes, but the question is not “can you do it” but “should you do it”.

That particular story ended up with them moving forward with VxRail.

They know that the buck stops with the VxRail team – not only to help them get going fast, but to keep doing it over the life of their project and their 6-year budget period, which will include multiple updates of the software and hardware stacks. At each point, for the full duration, they won’t be responsible for all the testing, integration work, and support. We will.

Now did they make that choice because vSAN is bad? Or PowerEdge is bad? NO.

Did they make that choice because the vSAN Ready Node program – which is no more than a refinement of the VMware vSphere HCL program to certify servers – didn’t work? In fact, I think Dell EMC and VMware have the best vSAN Ready Nodes – and the market is speaking, with us doing FAR more VxRail AND vSAN Ready Nodes than the competition. That’s not me guessing. I know.

Nope – it’s none of those things that made the choice for them clear. They just got to mental clarity: what was killing them was all the stuff beyond the basics.

A lot of customers happily choose the DIY route. In fact, about 2/3 of the VMware vSAN customers choose the DIY route, and about 1/3 choose the turnkey system route.

When you choose DIY – you’re wasting your time comparing the ingredients to those in the Consume choices – because often they are the SAME.

In the case of VxRail vs a vSAN Ready Node – there are multiple things that are not the same:

- There’s the value of integrated data protection ($35K of value per appliance).

- There’s the value of integrated hardware/software reporting/dial-home.

- There’s the value of integrated cloud storage and NAS ($10K of value per appliance).

- There’s the value of the 200+ steps that VxRail automates every time you add/change/remove/update nodes – each one a step where you can screw yourself up.

All that said, the main difference in DIY vs. Consume is a shift in where you place value. When you choose Consume vs. DIY at any level of the stack, you’re choosing simplicity over flexibility, and you’re choosing to elevate and shift skills higher up in the stack.

… And remember – as I needed to remind that customer – it’s not a “one time” thing. When you choose the DIY route, you’re actively choosing to keep configuring, testing, validating, integrating, and supporting said integration FOREVER.

We’ve quantified this value for VxRail – it’s around a 30% improvement vs. DIY. Read this:

![image image]()

At the strategic level, the most senior leaders of the company, at both VMware and Dell EMC, agree that we must support all the ways customers will deploy – vSAN, vSAN Ready Node, and VCF for DIY customers, or VxRail/VxRack SDDC for customers who want to consume.

But we also agree – there is NO EASIER WAY than the simple “Consume” path of VxRail and VxRack SDDC. BTW – for Dell EMC readers, there’s a short training video on this here internally.

Today – the realization that hit me – this is true at EVERY LEVEL of the stack, and there’s a pattern in this week’s announcements from Dell EMC at VMworld that reflects this. Here’s a generalized visualization:

![image image]()

Here’s a mapping of how it applies using the VMware and Dell EMC offers at every level:

![image image]()

Note that while I’ve put labels beside these that are (in the spirit of the week) VMware and Dell EMC – it’s notable that you can put all sorts of other examples on there. Azure Stack is an example of “Consume your IaaS/PaaS”. SaaS examples like SFDC and others clearly belong too: while we may be powering them behind the scenes, our participation is invisible to you as the customer.

Always remember – I’m not placing a value judgement on this. Both paths are valid, and the market has a lot of both.

BUT – I have an opinion. Every customer should carefully consider the burdens/optimization or “prioritization” at every level. In my experience – customers who are not “moving resources/time/money” up the stack are wasting resources/time/money.

This is a valid path for the ultimate DIY customer – pick your infrastructure by hand, build/test/validate/lifecycle the whole stack. Use VMware Validated Designs (VVDs) to reduce the risk as much as you can. Pick your own PaaS, CaaS – and enjoy putting it all together, and then maintaining it. Forever. Whether you call that person a “purist” or a “masochist” is a matter of opinion :-)

![image image]()

For the customer who chooses simplicity, skills transformation, and opex reduction at the virtual pools of SDS/SDC (in the case of VxRail) and SDN (in the case of VxRack SDDC and VMware Cloud on AWS), but still wants flexibility, focus on existing skills and optionality, they would follow this path:

![image image]()

They would pick VxRail, VxRack, VxBlock or VMware Cloud on AWS – which from that point on isn’t their problem. They would then focus on either using VVDs to reduce risk, or on the VMware Ready System offers from Dell EMC and VMware – which narrow the choices even further, to only VxRack SDDC, using the vRealize VVD and some further automation.

There are a ton of valid variations of this. Some customers choose to hand over the keys at the IaaS layer (that’s what EHC exists for).

![image image]()

I want to make this crazy real for you. If you want to understand just SOME (!!) of the decisions that you need to make if you DIY, and that are taken care of for you on the consume path, you HAVE to read this blog post – click on the image below:

![image image]()

Ok – are you back? Now, think of the work that goes into not only the design, but also the automated install and upgrade of that whole stack, and ultimately the single point of support. It’s a huge amount of work that a customer can do themselves (guided by a VVD) or have someone else do (EHC).

Some customers choose to hand over the keys at the PaaS level (that’s what NHC is for). Some choose to skip all the layers and just use SaaS. Some choose to skip some layers and just consume Public Cloud PaaS/CaaS/IaaS. Of course – the common path is a blend of many of these.

There’s one set of paths that are NOT allowed.

![image image]()

You cannot build a “consume path” on TOP of a “build path” – so once you cross from purple to blue – from then on up, it’s your problem, your stack, and you own the lifecycle management, integration/test, and support from that point on.
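If it helps to see that rule as logic rather than as a picture, here is a minimal sketch. It assumes a stack can be written as an ordered list of layers from bottom to top, each marked “consume” (purple) or “build” (blue). The names `Mode`, `is_allowed_path` and the layer labels are hypothetical, made up for illustration only, and are not part of any VMware or Dell EMC tooling.

```python
from enum import Enum


class Mode(Enum):
    CONSUME = "consume"  # "purple": someone else owns lifecycle, integration/test, support
    BUILD = "build"      # "blue": you own it


def is_allowed_path(path):
    """Return True if no CONSUME layer sits on top of a BUILD layer.

    `path` is a list of (layer_name, Mode) pairs ordered from the bottom
    of the stack to the top. Layer names are illustrative labels only.
    """
    crossed_to_build = False
    for _layer, mode in path:
        if mode is Mode.BUILD:
            crossed_to_build = True
        elif crossed_to_build:
            # A consume layer above a build layer: the NOT-allowed case.
            return False
    return True


# Consume the infrastructure, then build everything above it: allowed.
consume_then_build = [
    ("infrastructure", Mode.CONSUME),
    ("IaaS", Mode.BUILD),
    ("PaaS/CaaS", Mode.BUILD),
]

# Build the infrastructure by hand, then try to consume IaaS on top: not allowed.
build_then_consume = [
    ("infrastructure", Mode.BUILD),
    ("IaaS", Mode.CONSUME),
]

print(is_allowed_path(consume_then_build))  # True
print(is_allowed_path(build_then_consume))  # False
```

The single flag is the whole point: once you cross into “build”, nothing above that line can go back to “consume”.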

There’s also ONE BIG IMPORTANT thing to consider as we take the “big picture” view here, and also think over time.

The whole ecosystem (vendors, customers, partners, you name it) recognizes this pattern. Over time, we are all shifting to more and more purple. We’re all working to make the lifecycle management, integration/test of the layers get simpler, get more integrated. Want examples?

- Look at how VUM in vSAN 6.6.1 can get firmware updates and apply them. Simpler! Who owns the problem? It’s still you, but you can see how it’s making things easier for the DIY customer.

- You can see what VMware is doing around lifecycle management for VMware Cloud Foundation eventually extending up to vRealize. If it gets to a certain point, and someone takes on the full burden of lifecycle management, integration/test and support – well then the need for an EHC is reduced, and the VMware Ready System (currently a VVD on VxRack SDDC) changes color and becomes purple. Our GOAL, our STRATEGY is to take VxRack SDDC – and ultimately make it turnkey all the way up to the IaaS as VMware works on lifecycle management of their IaaS stack. It’s not there yet, but that’s the goal.

- The effort around PCF + Concourse, and Kubo (more on this later this week) are the basis of Pivotal Ready Systems (those stacks on VxRack SDDC). As we keep refining that, improving lifecycle management, ultimately it could cover the Native Hybrid Cloud use cases. Our GOAL, our STRATEGY is to take VxRack SDDC – and ultimately make it turnkey all the way up to the PaaS/CaaS layer as VMware and Pivotal work on PCF and Kubo. It’s not there yet, but that’s the goal.

But each of those examples is a journey, not an event. It’s the never-ending shift of value further and further up the stack.

Perhaps that’s the most important “meta” of them all. For us tinkerers, what we need to embrace is that as stuff gets more turnkey, more “purple”, if we don’t let go and hand over that responsibility to others, we’re holding ourselves back.

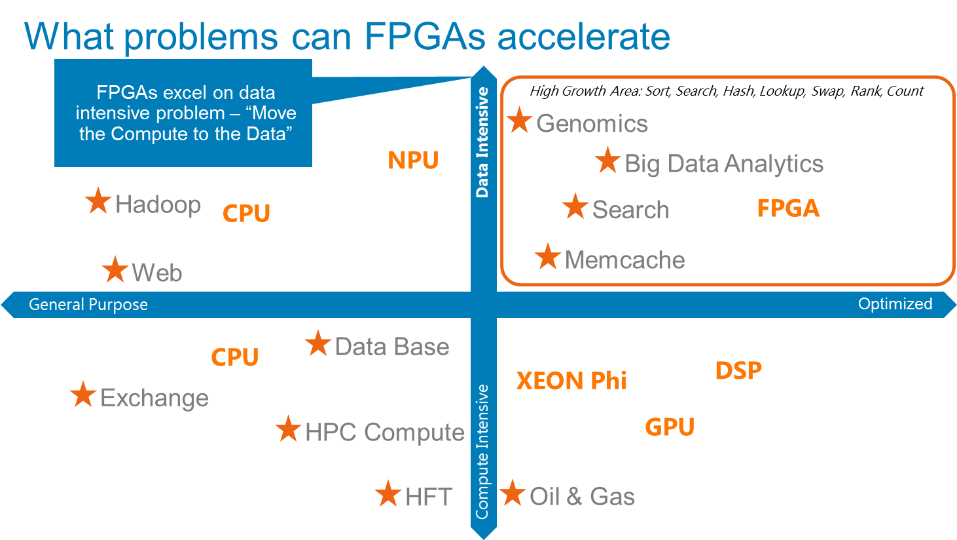

Think about the linked list pointer-chasing problem shown to the left here. In a general purpose CPU, when you need to traverse the linked list, every head/tail pointer fetch results in a cache miss because of the data’s unstructured nature, and thus the CPU does a cache line fill – generally 8 data items. But only the head/tail pointer was needed, which means 7/8 of the memory bus bandwidth was wasted on unnecessary accesses – potentially blocking another CPU core from getting the data it needed. Therein lies a big problem for general purpose CPUs with some of the new problems we face today.

See you all there!

Virtualization in its simplest form is leveraging software to abstract the physical hardware from the guest operating system(s) that run on top of it. Whether we are using VMware, XenServer, Hyper-V, or another hypervisor, from a conceptual standpoint they serve the same function.

This digital badge validates that those who earn it know how to simplify IT operations and extend their VMware environments through a fully optimized hyper-converged Dell EMC VxRail Appliance.

Education Services will be offering

We recognize our customers are in different states of IT readiness and priorities, which is why we offer a broad portfolio of options spanning complete turnkey hybrid cloud platforms to individual

With customized services from Dell EMC, we can help your organization assess and classify your applications and where they’re best suited to run – in the public cloud, in the private cloud, or on modernized infrastructure. With a thorough assessment you can determine which apps to retire, which to move to HCI and which should be refactored using agile, continuous delivery, and container-based architectures.

If you’re attending VMworld in Las Vegas this week, be sure to visit the Dell EMC booth #400 for dozens of theater sessions, a meet-the-experts bar, and conversation stations. Additionally, Dell Technologies is featured in 31 sessions that cover topics from data protection, converged and hyper-converged infrastructure, to workload validated Ready Solutions and empowering the digital workspace. And don’t miss the expert-led and self-paced labs in the VMworld Hands-on-Lab area. Here you’ll find a Dell EMC lab on Getting Started with VxRail among hundreds of other labs and certifications.
